AI hype relies on "demo world": promises of a future just out of reach that requires more data than humanity has created and more energy than the planet produces. The cost of getting there is everything, and we can't afford it. AI can automate mundane tasks and analyse structured data, but it cannot create meaningful content that connects emotionally with humans. It optimises towards perfection, removing the rough edges that make creative work interesting.

One of the driving factors behind me wanting to write on these subjects is the hypergrowth of speculative investment in technology, and the almost inescapable hype cycle it creates. If you thought tech like Augmented Reality apps was overhyped, you were in no way ready for the lofty hype of AI. I'm not going to attempt to recreate the stellar work of other journalists covering this topic, but I do want to credit each of them here, as their willingness to investigate has helped us to separate innovation from boosterism.

Firstly, thanks to Molly White. Molly’s coverage of Web3 (for those unfamiliar, think NFTs, the Metaverse, Blockchain, Crypto) in Web3 is Going Just Great has been exceptional. You can also get her newsletter at Citation Needed. It’s a strong recommendation from me.

I also want to credit Paris Marx and the work he's done on Tech Won't Save Us. Marx has extensively covered Silicon Valley's movement from business into politics, where very large tech businesses use political means to force commercial frameworks onto a population that largely doesn't want them and won't benefit from them. Think about Elon Musk cosying up to Trump in order to get various lawsuits against him dropped and to gain exclusive access to contracts. That kinda thing.

I'd be remiss if I didn't mention Ed Zitron. Ed's work on Where's Your Ed At frequently acts as a refreshing counterpoint to the traditional narratives you might read in more mainstream press coverage. Ed also hosts Better Offline, a podcast on the same topics that challenges the hype and dogma around AI.

And finally, author and blogger Cory Doctorow. Cory runs craphound.com and has published numerous books on tech, politics and society. He's perhaps best known for coining the term “Enshittification”, which describes how tech platforms sabotage everyone's experience but their own in order to take a larger share of profits year-on-year.

Now that those credits are out of the way, on with the article. I wanted to write about my own experiences with AI and compare them to how it's sold to us. I haven't quite managed to condense everything into pithy axioms yet, but I have a few thoughts that I think are essential to understanding the role AI will play in our lives over the next 10 to 15 years, after the hype cycle dies down.

Firstly, I think we must reject the premise of what I have heard described as “demo world”. One key trait of how all tech boosterism presents itself, AI included, is that it always has to promise a future that's just out of reach. It's the dollar on a string: if we just invested a bit more, if we just made this technology a major part of our lives, if we just reskilled and retooled and rewrote everything, then the tech utopia that can 10x production and lift GDP to stratospheric heights could finally be realised. It's patently fictional. We have to understand that a large number of these businesses are just trying to appeal to a very, very small investor class and generate enough hype to attract funding rounds to ‘scale up’. If you talk to AI engineers and people working in the field who aren't trying to attract VC funding, they'll be quite open with you about what would be needed to deliver this: more data than humanity has ever created, and more energy than the planet currently produces, all at a cost low enough to reach a price point that allows mass adoption and undercuts hiring enough labour to do the same thing. The promises are huge. A world without humans in it, where it's just machines talking to machines. And all it will cost us to get there is everything.

Reject demo world when it’s presented to you. None of us can actually afford to get there.

Secondly, I think AI is frequently positioned as something that can create engaging and meaningful content. I do not believe that to be true. I think it can automate mundane tasks. I think it can draft emails that you never really wanted to write. I think it can analyse data within a table and tell you interesting things about the relationships in that data. I think it can format text. I don't think it can create meaningful content that connects emotionally with humans. It's probably perfect at creating fodder that never really mattered anyway. For example, if you need a music track for a 15-second advert for hair loss cream, it'll do that just fine. The standards were low to begin with. That song was never going to touch the human heart. Mark Ronson isn't going to be listing the hair loss cream advert song as one of his favourites on the inevitable episode of “I Love the 2020s” that will exist one day. It was just background noise needed to meet the form expectations of advertising. It might even be able to produce other advertising assets, though I've yet to see this done well. The result is almost always noticeably AI-generated, and that erodes trust.

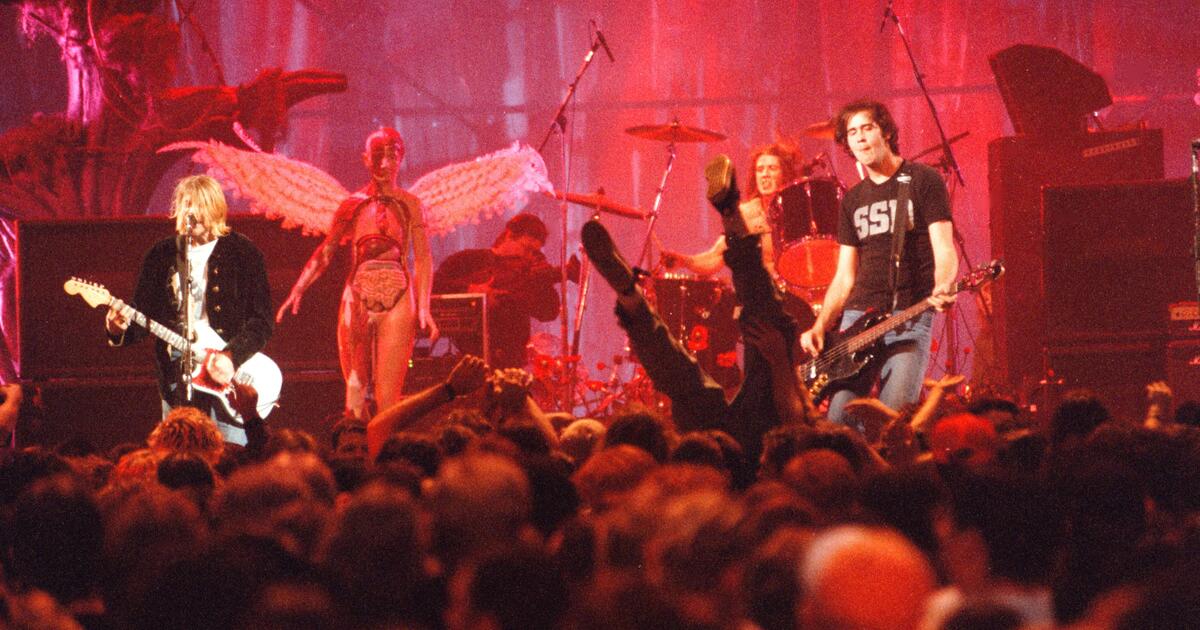

I also think there's a common misconception about what AI is being built for versus what people value about creative work. AI builds for perfection. Even aberrations and flaws have to be generated predictably, via a pattern. There's no way to generate genuine rough edges with an AI, and the rough edges are as much a part of finished work as anything else. There's a notion that we're optimising our way towards the perfect four-minute pop song, and that if we can just capture enough data about all the other pop songs and smooth off the rough edges, we'll somehow have found the perfect form. Yet when we do that in practice, we find that we've removed the most interesting parts and produced a dull parody of art. I wonder what tech's punk moment will be: the point when the endless reproduction of established norms, constrained by the predictive-graph framework of AI, becomes so stagnant that it prompts a radical departure towards something raw, imperfect and yet beautiful.

Do I think all AI is bad? Not really. I have real concerns over its environmental impact, especially the models proposed within the US, which require huge amounts of new infrastructure just to deliver them. I think it'd be a very sad world indeed if we could deliver privately owned nuclear power to write emails we don't want to write, but couldn't deliver mass transit through trains and trams, low-cost energy for all, or the housing and end to food poverty that would improve everyone's lives and create a fairer society.

Some of the models coming out of DeepSeek seem promising for their dramatic reduction in energy consumption. Couple that with publicly owned nuclear power and renewables, and with models that don't require so much cooling to operate, and I'd probably be far less concerned about the impact on the environment.

I have concerns about what it might do to society and communication. I have a dystopian vision of a future where somebody asks an AI to turn “sorry but I need more money” into a 1,000-word email because that's the form expectation, and then the recipient asks an AI to summarise it, turning it back into “sorry but I need more money”. What a horror that would be.

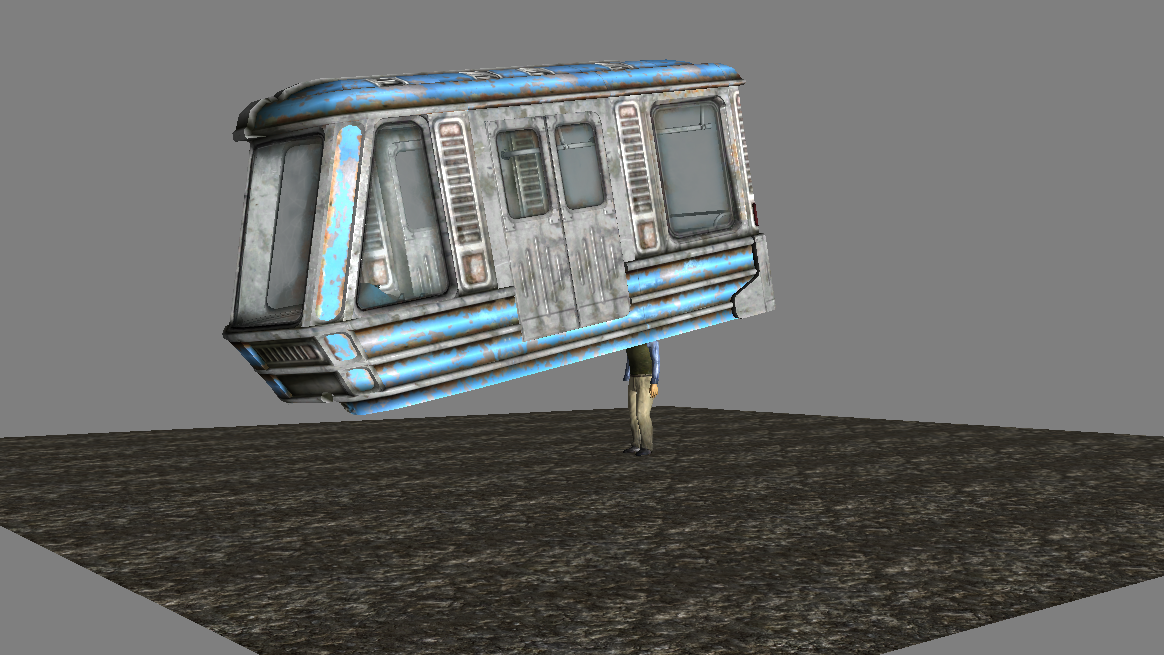

Where I do believe there's a relatively decent future for AI, provided the energy and environmental needs can be met, is in data models built on huge datasets, or in providing quick natural language interfaces to data that doesn't have a consistent structure or schema. There are probably also some benefits for things like coding websites and apps, where it'll reduce the burden on developers to memorise syntax and rely on cheat sheets. For genuinely sophisticated apps, though, you'll still need talented human developers, because that work was never about raw code production; it was about clever solutions to complex problems. I'm reminded of an example from video game development. In Fallout 3's expansion Broken Steel, the game's engine had no real capability to create vehicles. The developers wanted a scene where you, the player, ride a train to get to a new location. There was no way for the engine to create a train system, but it was an essential plot beat: you reactivate a rail network and then take the metro.

They solved this problem by building a train carriage as a hat and putting it on your character's head. You pull the lever to start the train, the existing train model despawns, the train hat is placed on your head, and your character then walks (quickly) along a set path. The whole scene is disguised well enough that the player would never notice.

There are other examples of this in video games too. Some tables in Skyrim are just the top quarter of a bookshelf clipping through the floor, which gives you two assets for the price of one for room decoration.

This is the real genius and artform of the talented developer: understanding the problem and the toolkit so well that you can build elegantly within it. But every one of those talented developers was once a junior developer. If we farm junior development work out to AI every time simply because it's cheaper to do so, we lose the next generation of senior developers in a decade or two.

It's clear to me that whilst use cases for AI do exist, and adoption has happened to some extent (particularly within teams that were already heavily constrained), we're still far from the future we're being promised, and I don't know if we'll ever get there.

Plus, at a time when platforms are consolidating and trying to become ‘clouds’ full of tools that all belong to a single ecosystem, does AI simply build even more monopolies to be locked into, with pricing tiers that keep spiralling upwards whilst service scales downwards to satisfy the VCs who made speculative bets worth billions of pounds on overvalued companies?